You control the execution order of tasks by specifying dependencies between the tasks. You can configure tasks to run in sequence or parallel. The following diagram illustrates a workflow that:

- Ingests raw clickstream data and performs processing to sessionize the records.
- Ingests order data and joins it with the sessionized clickstream data to create a prepared data set for analysis.
- Extracts features from the prepared data.
- Performs tasks in parallel to persist the features and train a machine learning model.

Legacy Spark Submit applications are also supported. There are several limitations for spark-submit tasks:

- You can run spark-submit tasks only on new clusters.
- Spark-submit does not support cluster autoscaling. To learn more about autoscaling, see Cluster autoscaling.
- Spark-submit does not support Databricks Utilities. To use Databricks Utilities, use JAR tasks instead.

The fields you fill in depend on the task type:

- Python: In the Path text box, enter the URI of a Python script on DBFS or cloud storage, for example, dbfs:/FileStore/myscript.py.
- Pipeline: In the Pipeline drop-down, select an existing Delta Live Tables pipeline.
- Python Wheel: In the Package name text box, enter the package to import, for example, a wheel file name ending in -none-any.whl. In the Entry Point text box, enter the function to call when starting the wheel.

Click Add under Dependent Libraries to add libraries required to run the task.

Configure the cluster where the task runs. In the Cluster drop-down, select either New Job Cluster or Existing All-Purpose Cluster.

- New Job Cluster: Click Edit in the Cluster drop-down and complete the cluster configuration.
- Existing All-Purpose Cluster: Select an existing cluster in the Cluster drop-down. To open the cluster in a new page, click the icon to the right of the cluster name and description.

To learn more about selecting and configuring clusters to run tasks, see Cluster configuration tips.

Each task type has different requirements for formatting and passing the parameters:

- Notebook: Click Add and specify the key and value of each parameter to pass to the task. You can override or add additional parameters when you manually run a task using the Run a job with different parameters option. Parameters set the value of the notebook widget specified by the key of the parameter. Use task parameter variables to pass a limited set of dynamic values as part of a parameter value.
- JAR: Use a JSON-formatted array of strings to specify parameters. These strings are passed as arguments to the main method of the main class.
- Spark Submit task: Parameters are specified as a JSON-formatted array of strings. Conforming to the Apache Spark spark-submit convention, parameters after the JAR path are passed to the main method of the main class.
- Python: Use a JSON-formatted array of strings to specify parameters.
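The parameter formats described for each task type can be illustrated with a short sketch. This is only an illustration under stated assumptions: the helper names `to_parameter_array` and `args_after_jar`, the class name, the JAR path, and all parameter values are hypothetical and do not come from the Databricks documentation.

```python
import json


def to_parameter_array(values):
    """Serialize parameters as a JSON-formatted array of strings.

    Hypothetical helper: rejects non-string items so that numbers are
    converted explicitly before they reach the task.
    """
    values = list(values)
    for value in values:
        if not isinstance(value, str):
            raise TypeError(f"parameter {value!r} is not a string")
    return json.dumps(values)


def args_after_jar(parameters):
    """Return the items that follow the first JAR path in a spark-submit list.

    Per the spark-submit convention, these are the arguments passed to the
    main method of the main class.
    """
    for index, item in enumerate(parameters):
        if item.endswith(".jar"):
            return parameters[index + 1:]
    return []


# JAR and Python tasks: a JSON-formatted array of strings.
python_params = to_parameter_array(["--date", "2022-01-01", "--limit", "100"])

# Spark Submit task: everything after the JAR path goes to the main method.
# The class name and JAR path are made-up placeholders.
spark_submit_params = to_parameter_array(
    ["--class", "com.example.Main", "dbfs:/FileStore/example.jar",
     "--date", "2022-01-01"]
)

# Notebook task: key-value pairs, where each key names a notebook widget.
notebook_params = {"run_date": "2022-01-01", "limit": "100"}

print(python_params)
print(args_after_jar(json.loads(spark_submit_params)))
```

Keeping every value a string, including numbers such as `"100"`, matches the "array of strings" requirement and leaves any type conversion to the task's own code.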

You can implement a task in a JAR, a Databricks notebook, a Delta Live Tables pipeline, or an application written in Scala, Java, or Python. You can run your jobs immediately or periodically through an easy-to-use scheduling system.

Your job can consist of a single task or can be a large, multi-task workflow with complex dependencies. Databricks manages the task orchestration, cluster management, monitoring, and error reporting for all of your jobs. You can also run jobs interactively in the notebook UI. For example, you can run an extract, transform, and load (ETL) workload interactively or on a schedule.

You can create and run a job using the UI, the CLI, or by invoking the Jobs API. You can repair and re-run a failed or canceled job using the UI or API. You can monitor job run results using the UI, CLI, API, and email alerts. This article focuses on performing job tasks using the UI. For the other methods, see Jobs CLI and Jobs API 2.1.
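For readers who create jobs through the Jobs API rather than the UI, the sketch below assembles a request body for a two-task job without sending it anywhere. The field names (`tasks`, `task_key`, `depends_on`, `notebook_task`, `spark_python_task`) reflect my understanding of the Jobs API 2.1 job-creation format and should be checked against the API reference. The job name, cluster ID, notebook path, and parameter values are hypothetical placeholders.

```python
import json


def build_job_payload(name, cluster_id):
    """Build a request body for a job with two dependent tasks.

    Assumption: field names follow the Jobs API 2.1 job-creation format;
    verify them against the official reference before use.
    """
    return {
        "name": name,
        "tasks": [
            {
                "task_key": "ingest",
                "existing_cluster_id": cluster_id,
                # Notebook parameters are key-value pairs.
                "notebook_task": {
                    "notebook_path": "/Shared/ingest",  # hypothetical path
                    "base_parameters": {"run_date": "2022-01-01"},
                },
            },
            {
                "task_key": "report",
                # This task runs only after "ingest" has completed.
                "depends_on": [{"task_key": "ingest"}],
                "existing_cluster_id": cluster_id,
                # Python script parameters are an array of strings.
                "spark_python_task": {
                    "python_file": "dbfs:/FileStore/myscript.py",
                    "parameters": ["--date", "2022-01-01"],
                },
            },
        ],
    }


# "0000-000000-example" is a placeholder, not a real cluster ID.
payload = build_job_payload("example-etl", "0000-000000-example")
body = json.dumps(payload, indent=2)
print(body)
```

The sketch stops at building the JSON body on purpose, since authentication and the workspace URL depend on your environment.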

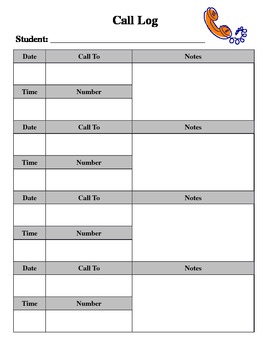
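Task dependencies determine which tasks run in sequence and which can run in parallel. The sketch below models the clickstream workflow described in this article as a dependency graph and groups its tasks into stages with Python's standard `graphlib` module (Python 3.9 or later). The task names and the exact dependency edges are assumptions made for illustration, and this is not how Databricks itself schedules tasks.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on.
# Task names and edges are hypothetical, inferred from the workflow text.
dependencies = {
    "sessionize_clickstream": set(),
    "prepare_orders": {"sessionize_clickstream"},
    "extract_features": {"prepare_orders"},
    "persist_features": {"extract_features"},
    "train_model": {"extract_features"},
}


def execution_stages(deps):
    """Group tasks into stages; tasks in the same stage can run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are done
        stages.append(ready)
        sorter.done(*ready)
    return stages


stages = execution_stages(dependencies)
for number, stage in enumerate(stages, start=1):
    print(number, stage)
```

In this model the first three tasks run one after another, while `persist_features` and `train_model` land in the same final stage because both depend only on `extract_features`.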

Add tasklog reord code

A job is a way to run non-interactive code in a Databricks cluster.
